Machine Learning: LSTM

LSTM: Long Short-Term Memory networks

cite Understanding-LSTMs Learning-Memory-Access-Patterns

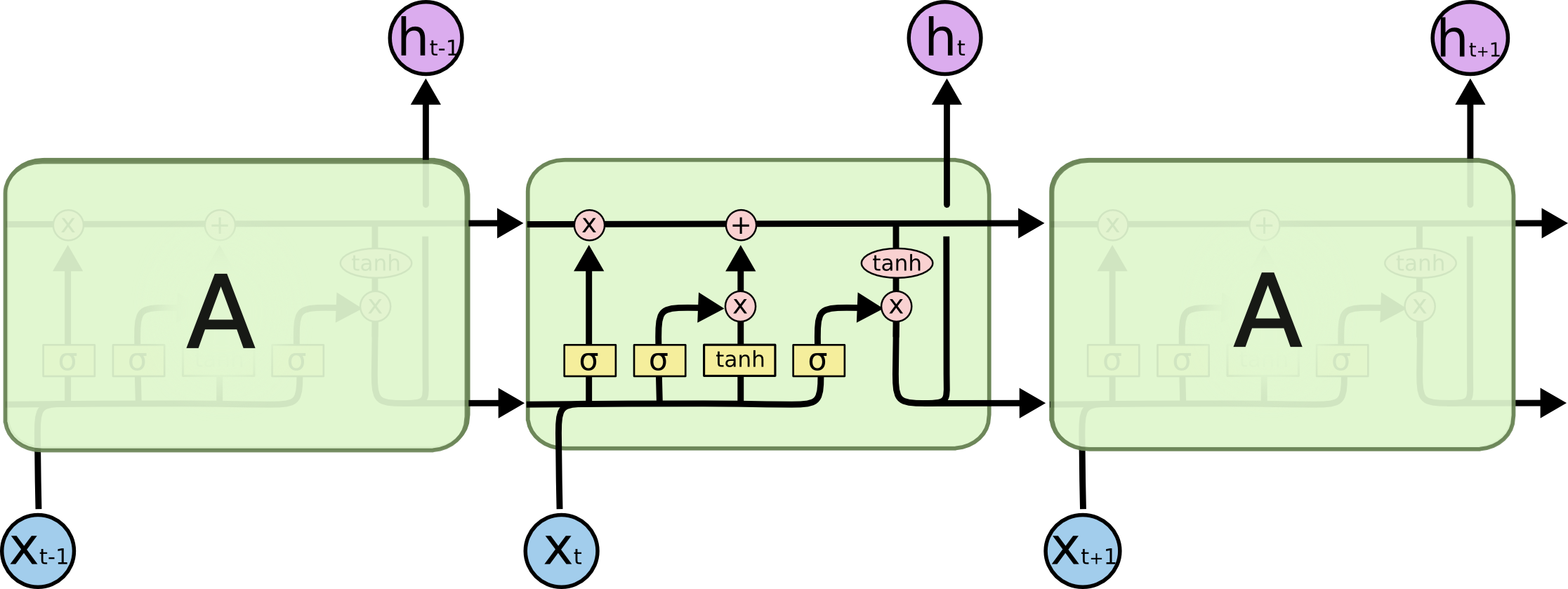

An LSTM maintains a hidden state $h$ and a cell state $C$, and uses three gates (input $i$, forget $f$, and output $o$) that control what information is stored in the cell state and propagated to the next timestep.

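Note: $[\cdot,\cdot]$ denotes concatenation, so $[h_{t-1},x_t]$ stacks the previous hidden state and the current input into a single vector. $*$ denotes element-wise multiplication.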
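A minimal pure-Python check of the concatenation notation, assuming toy sizes and hypothetical weights: multiplying $W$ by $[h_{t-1},x_t]$ gives the same result as splitting $W$ column-wise into a block $W_h$ that acts on $h_{t-1}$ and a block $W_x$ that acts on $x_t$, then summing $W_h h_{t-1} + W_x x_t$. The names `W_h`, `W_x`, `matvec`, and `concat` are illustrative, not taken from the source.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, v: Vector) -> Vector:
    """Plain matrix-vector product W . v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def concat(h: Vector, x: Vector) -> Vector:
    """[h, x]: place h and x end to end in one longer vector."""
    return h + x


# Hypothetical toy sizes: hidden size 2, input size 3.
h_prev = [0.5, -1.0]
x_t = [1.0, 2.0, 0.0]
W = [[0.1, 0.2, 0.3, 0.4, 0.5],
     [-0.1, 0.0, 0.2, -0.3, 1.0]]  # shape 2 x (2 + 3)

# Split W column-wise: first len(h) columns act on h, the rest act on x.
W_h = [row[:len(h_prev)] for row in W]
W_x = [row[len(h_prev):] for row in W]

lhs = matvec(W, concat(h_prev, x_t))
rhs = [a + b for a, b in zip(matvec(W_h, h_prev), matvec(W_x, x_t))]
print(lhs, rhs)  # the two vectors agree up to floating-point rounding
```

This is why $[h_{t-1},x_t]$ is only a notational convenience: one weight matrix per gate covers both the recurrent and the input connections.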

- Forget (or inherit): decide which information from the previous cell state $C_{t-1}$ is carried forward.

$$f_t=\sigma\left(W_f\cdot\left[h_{t-1},x_t\right]+b_f\right)$$

- Input: decide which new information is written into the cell state.

$$i_t=\sigma\left(W_i\cdot\left[h_{t-1},x_t\right]+b_i\right)$$

$$\widetilde{C_t}=\tanh\left(W_C\cdot\left[h_{t-1},x_t\right]+b_C\right)$$

- Update: compute the new cell state.

$$C_t=f_t*C_{t-1}+i_t*\widetilde{C_t}$$

- Output: decide the output (hidden state) $h_t$, which depends on the updated cell state $C_t$, the current input $x_t$, and the previous hidden state $h_{t-1}$.

$$o_t=\sigma\left(W_o\cdot\left[h_{t-1},x_t\right]+b_o\right)$$

$$h_t=o_t*\tanh(C_t)$$
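The equations above can be collected into a single timestep. The sketch below is pure Python (no NumPy) so that it stays self-contained. The function names `lstm_step`, `lstm_forward`, `zero_params`, and `num_params` and the dict-of-gates layout are hypothetical choices for illustration, not any library's API. It follows the equations literally: one weight matrix $W$ acting on $[h_{t-1},x_t]$ and one bias $b$ per gate. Frameworks such as PyTorch split the weights into separate input and hidden matrices with two biases per gate, which changes the parameter count.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]
# Gate name ("f", "i", "C", "o") -> (W, b), with W of shape hidden x (hidden + input).
Params = Dict[str, Tuple[Matrix, Vector]]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function sigma(z)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def affine(W: Matrix, b: Vector, v: Vector) -> Vector:
    """Compute W . v + b row by row."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]


def lstm_step(params: Params, h_prev: Vector, c_prev: Vector, x_t: Vector) -> Tuple[Vector, Vector]:
    """One LSTM timestep: (h_{t-1}, C_{t-1}, x_t) -> (h_t, C_t)."""
    hx = h_prev + x_t  # [h_{t-1}, x_t] as list concatenation
    f_t = [sigmoid(z) for z in affine(*params["f"], hx)]        # forget gate
    i_t = [sigmoid(z) for z in affine(*params["i"], hx)]        # input gate
    c_tilde = [math.tanh(z) for z in affine(*params["C"], hx)]  # candidate cell state
    o_t = [sigmoid(z) for z in affine(*params["o"], hx)]        # output gate
    # C_t = f_t * C_{t-1} + i_t * C~_t, all products element-wise
    c_t = [f * c + i * ct for f, c, i, ct in zip(f_t, c_prev, i_t, c_tilde)]
    # h_t = o_t * tanh(C_t)
    h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]
    return h_t, c_t


def lstm_forward(params: Params, xs: List[Vector], hidden_size: int) -> Tuple[List[Vector], Vector]:
    """Unroll lstm_step over a sequence, starting from zero h and C."""
    h, c = [0.0] * hidden_size, [0.0] * hidden_size
    hs = []
    for x_t in xs:
        h, c = lstm_step(params, h, c, x_t)
        hs.append(h)
    return hs, c


def zero_params(hidden_size: int, input_size: int) -> Params:
    """Hypothetical all-zero weights and biases, for illustration only."""
    cols = hidden_size + input_size
    return {g: ([[0.0] * cols for _ in range(hidden_size)], [0.0] * hidden_size)
            for g in ("f", "i", "C", "o")}


def num_params(hidden_size: int, input_size: int) -> int:
    """Four gates, each with an n_h x (n_h + n_x) weight matrix and an n_h bias."""
    return 4 * (hidden_size * (hidden_size + input_size) + hidden_size)


params = zero_params(hidden_size=1, input_size=2)
h1, c1 = lstm_step(params, h_prev=[0.0], c_prev=[1.0], x_t=[3.0, -2.0])
print(c1, h1)  # [0.5] and [0.5 * tanh(0.5)], about [0.2311]
hs, c_last = lstm_forward(params, [[1.0, 0.0], [0.0, 1.0]], hidden_size=1)
print(len(hs), num_params(hidden_size=1, input_size=2))
```

With all-zero weights every gate evaluates to $\sigma(0)=0.5$ and the candidate to $\tanh(0)=0$, so $C_t = 0.5\,C_{t-1}$ and $h_t = 0.5\tanh(C_t)$; this makes the output easy to check by hand before plugging in trained weights.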